If you think that workers who rely heavily on generative AI may be less prepared to deal with questions that cannot easily be answered by the machine, you may be right.

A recent paper from researchers at Microsoft and Carnegie Mellon University used a self-reported study to examine the effects of generative AI on workers' “cognitive effort”. In it, the paper states, “Used improperly, technologies can and do result in the deterioration of cognitive faculties that ought to be preserved.”

The researchers surveyed a total of 319 knowledge workers who use generative AI tools for work at least once a week, studying 936 relevant “real-world GenAI tool examples” in which participants shared how they go about their work. The study found that critical thinking was primarily deployed to verify the quality of work, and that as confidence in AI tools increased, critical thinking effort decreased.

The paper concludes:

“To that end, our work suggests that GenAI tools need to be designed to support knowledge workers' critical thinking by addressing their awareness, motivation, and ability barriers.”

Since this paper is based on the self-reports of 319 workers, the study rests on self-perception. As a result, some obstacles presented themselves in the method. For example, the paper states that some participants may have conflated how much critical thinking they applied with how complex the task felt.

Effectively, if the AI-generated response satisfied the user, they came away believing they had not critiqued it as much as they would have if the task had felt more complicated. At the same time, qualities such as critical thinking and satisfaction are subjective in nature.

“In future work, longitudinal studies tracking changes in AI usage patterns and their impact on critical thinking processes would be beneficial.”

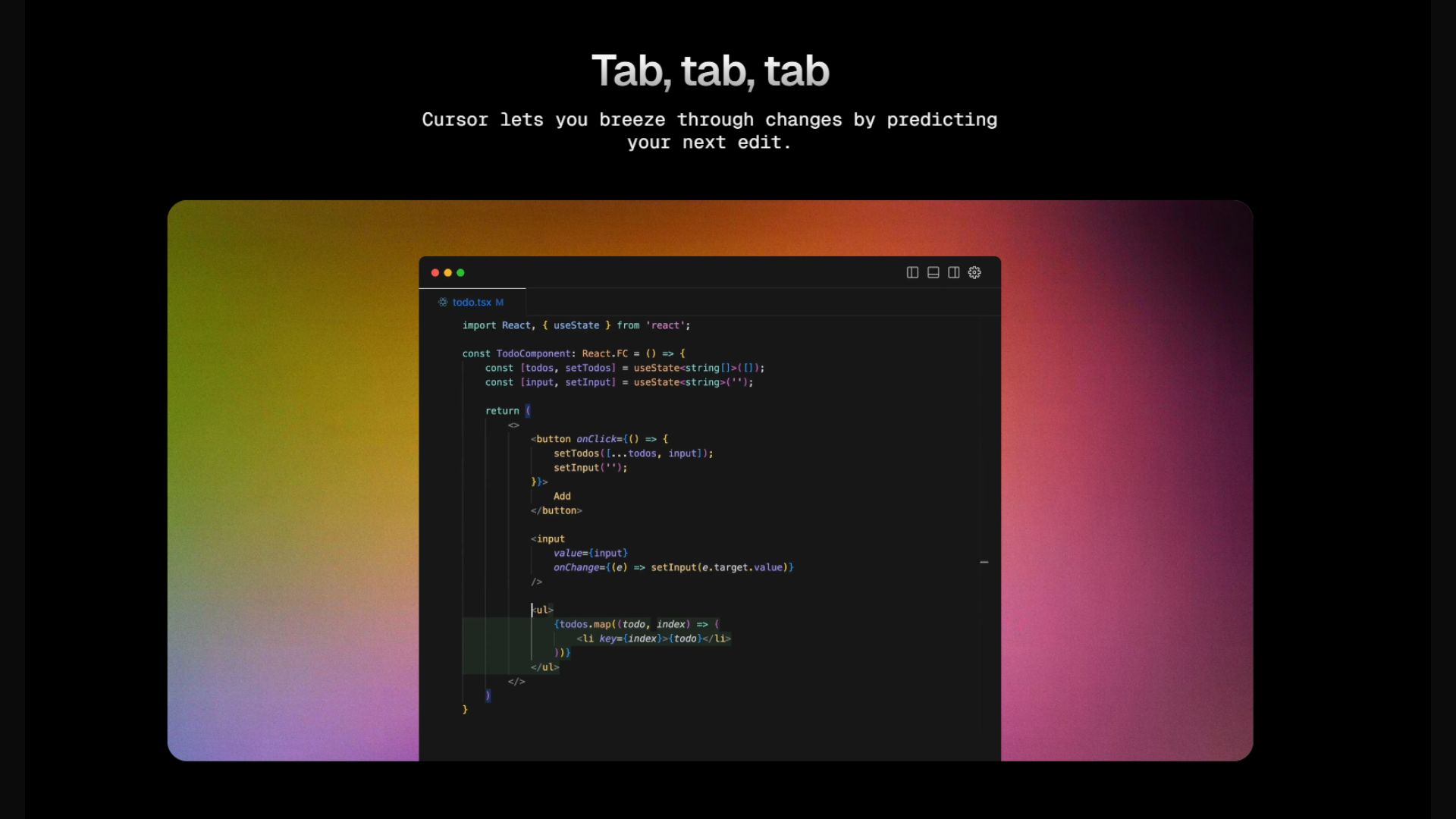

However, the study's conclusion is that greater confidence in generative AI tools can lead to less critical thinking about their results. Cursor, an AI-based code editor, recently responded to a request involving more than 750 lines of code by telling the user that it could not generate that amount because doing so would be “completing your work” (via Ars Technica). It went on to explain:

“Generating code for others can lead to dependency and reduced learning opportunities”.

The user who reported this ‘problem’ quickly followed up, saying they were “not sure if LLMs know what they are for (lol).” The original user later responded to a comment, saying that their prompt to Cursor had simply told it to keep generating code, and to keep going until it reached the closing tags.

However, the ‘logic’ behind Cursor's response makes sense in light of this recent paper. If someone asks a coding AI to generate hundreds of lines, and problems then turn up in that code, it will be very difficult to find the problem when you do not know what the code does in the first place.

Fundamentally, generative AI tools are imperfect. They are built by scraping data (some of it copyrighted), and they suffer from hallucinations thanks to a glut of information. They can be wrong, and often are, so it is important to check the information they provide when you use them.

As this study suggests, if AI tools are implemented into a workflow, their output has to be scrutinized to avoid losing the critical faculties needed to work with such a wide range of data.

As the paper notes, “GenAI tools are constantly evolving, and the ways in which knowledge workers interact with these technologies are likely to change over time.” For these AI tools, the next step is to put up barriers, and step back to leave room for human creativity. Well, maybe I really mean ‘hope’.