When Sony’s masterpiece, The Last of Us Part 1, appeared on PC two years ago, I hoped it would be a watershed moment for console ports. It was, but for all the wrong reasons. It was buggy and unstable, it was hard on your CPU and GPU, and above all it tried to use more VRAM than your graphics card had. It’s fair to say that TLOU1’s watershed moment did a lot to fuel the whole ‘8GB of VRAM isn’t enough’ debate.

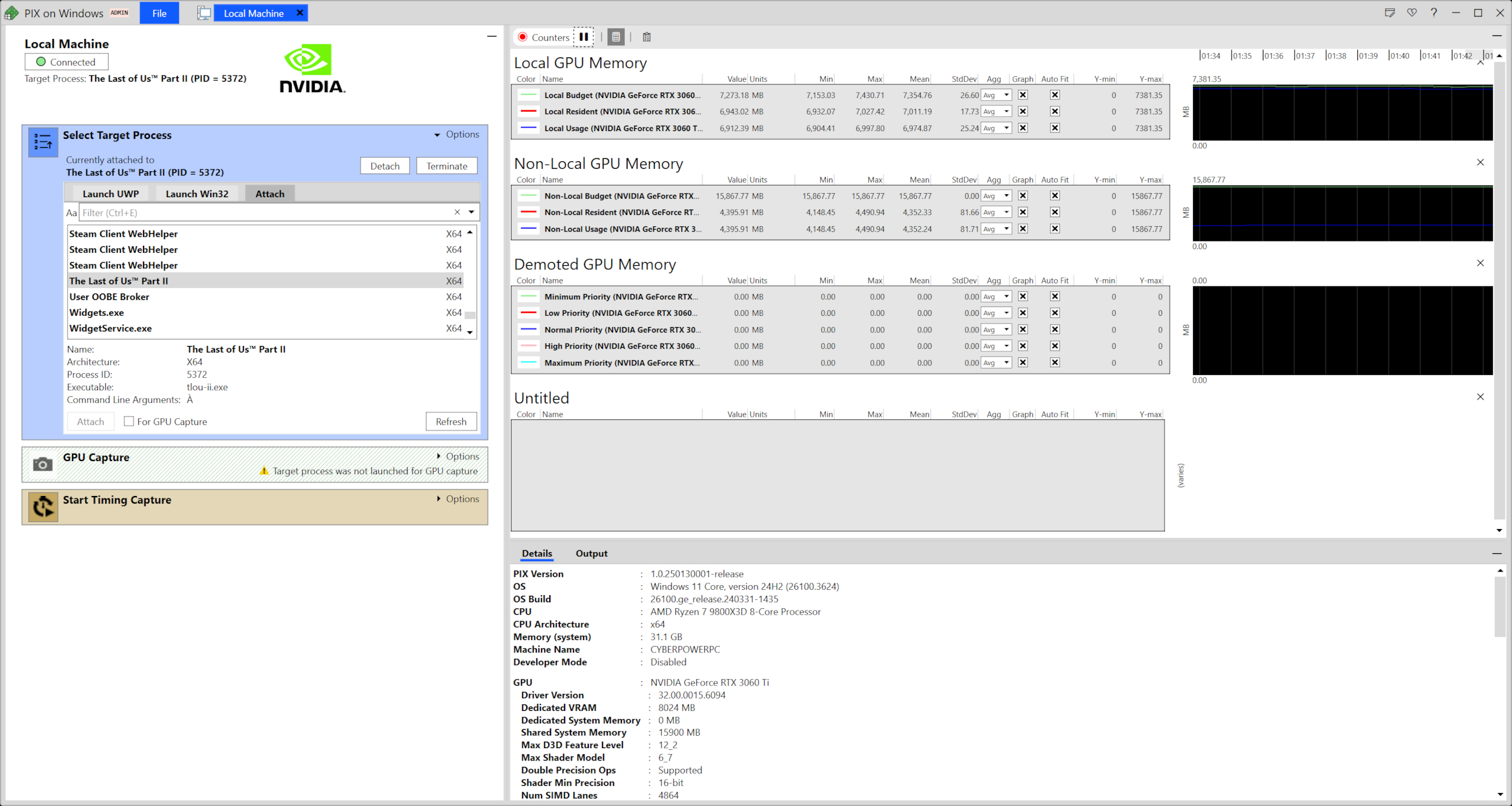

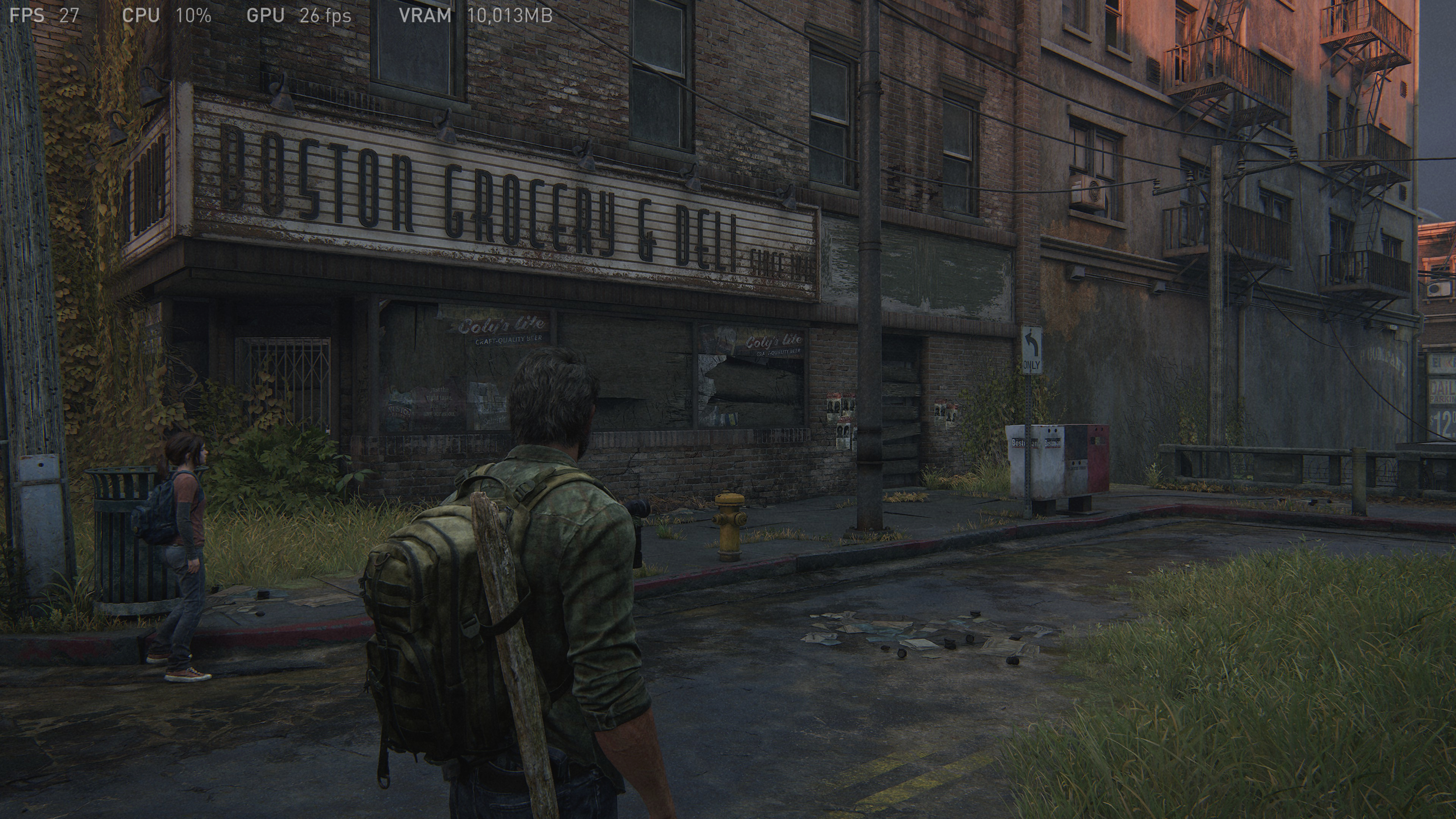

Most of these problems were eventually fixed through a series of patches. However, like many big-budget games with demanding graphics, if you fire it up at 4K on Ultra settings, the game will happily let you use more VRAM than you actually have. The TLOU1 screenshot below is from a test rig using an RTX 3060 Ti, which has 8GB of memory, and it shows the built-in performance HUD. I confirmed the memory usage with other tools, and the game really is trying to use 10GB of VRAM.

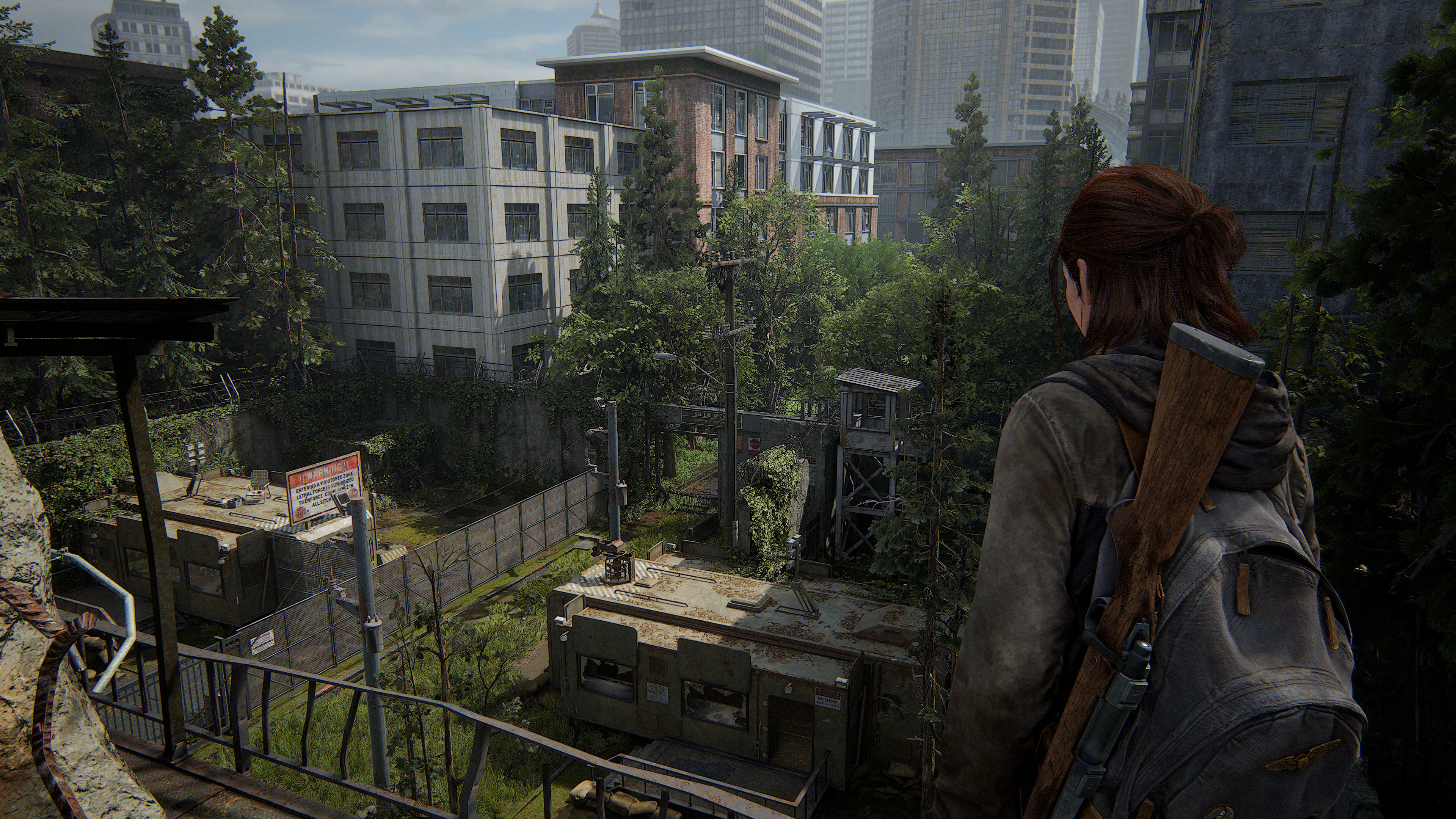

So when I started checking out The Last of Us Part 2 a few weeks ago, the first thing I monitored after playing through a fair chunk of the game was how much graphics memory it was trying to allocate and use. To do this, I used Microsoft’s PIX on Windows, a tool that lets developers analyze what’s going on under the hood of their game in terms of threads, resources, and performance.

Surprisingly, I discovered two things: (1) TLOU2 doesn’t eat up VRAM the way Part 1 did, and (2) the game pretty much always uses 80% to 90% of the GPU’s memory, regardless of the resolution and graphics settings being used. You might find that a little hard to believe, so here’s some evidence.

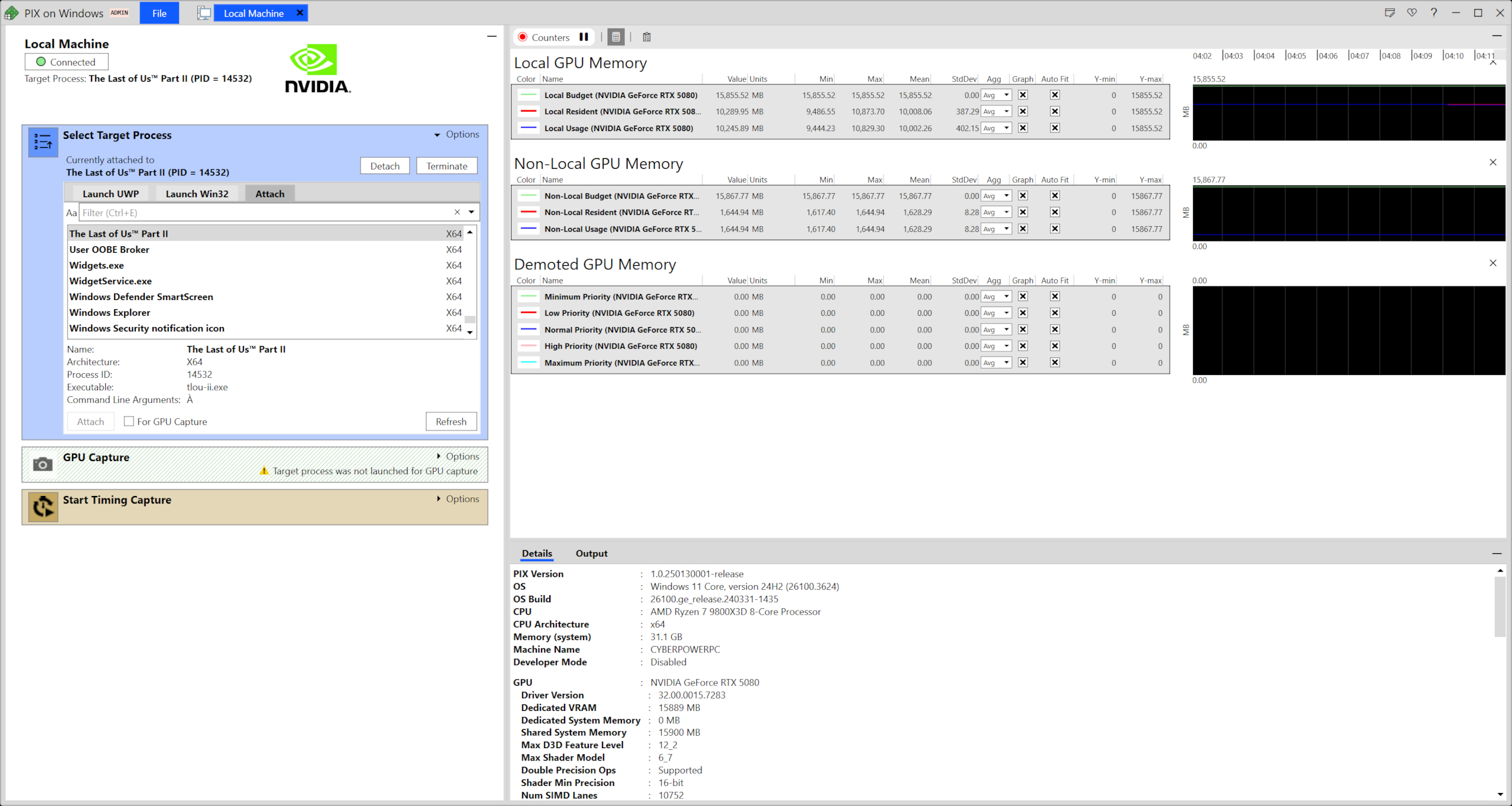

The PIX screenshots show the amount of local and non-local memory being used in TLOU2 on a CyberPowerPC rig with a Ryzen 7 9800X3D, using RTX 5080 and RTX 3060 Ti graphics cards. The former has 16GB of VRAM, while the latter has 8GB. In both cases, I played the game at 4K using the maximum quality settings (i.e. the Very High preset, plus the maximum field of view and 16x anisotropic filtering), with upscaling and frame generation enabled (DLSS on the 5080, FSR on the 3060 Ti).

Note that in both cases, the amount of local memory *used* by each card doesn’t exceed the actual amount of VRAM, even though both are running with the same graphics settings. Of course, this is how any game *should* handle memory, but after the TLOU1 debacle, it’s nice to see it all sorted out for Part 2.

If you look carefully at the PIX screenshots, you’ll see that the RTX 3060 Ti uses more non-local memory than the RTX 5080: 4.25GB vs. 1.59GB. Non-local, in this case, refers to system memory, and what’s using this portion of RAM for the GPU is the game’s asset streaming system. Since the 3060 Ti has only 8GB of VRAM, its streaming buffer needs to be larger than the RTX 5080’s.

During the gameplay loop I ran to collect this information, the RTX 5080 used an average of 9.77GB of local memory and 1.59GB of non-local memory, for a total of 11.36GB. For the RTX 3060 Ti, the figures were 6.81GB and 4.25GB respectively, a total of 11.06GB.
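The totals are simple sums, and the gap between them is the ‘few hundred MB’ discussed next. Here’s a quick Python check of the arithmetic using the PIX averages quoted above. For the difference, it treats 1GB as 1,000MB, which is a simplifying assumption.

```python
# Average PIX figures from the gameplay loop, in GB
# (local = VRAM, non-local = system RAM used on the GPU's behalf).
measurements = {
    "RTX 5080": {"local": 9.77, "non_local": 1.59},
    "RTX 3060 Ti": {"local": 6.81, "non_local": 4.25},
}


def total_gb(card):
    """Local plus non-local memory for one card, rounded to 2 decimal places."""
    m = measurements[card]
    return round(m["local"] + m["non_local"], 2)


totals = {card: total_gb(card) for card in measurements}
# Difference between the two totals, assuming 1GB = 1,000MB.
gap_mb = round((totals["RTX 5080"] - totals["RTX 3060 Ti"]) * 1000)

print(totals)  # {'RTX 5080': 11.36, 'RTX 3060 Ti': 11.06}
print(gap_mb)  # 300
```

The local figures differ by almost 3GB, but the totals differ by only 0.3GB. The 3060 Ti moves most of the overflow into system RAM instead of over-committing its VRAM.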

Why aren’t they exactly the same? Well, the 3060 Ti was using FSR Frame Generation, while the 5080 was running DLSS Frame Generation, which could partly explain the few hundred MB of difference between the two cards. The other possible reason is that the gameplay loops weren’t identical, so, for the record, the two setups weren’t rendering exactly the same assets.

Not that it really matters, because my point is that TLOU2 is an example of a game that handles VRAM properly: it doesn’t try to cram more assets into the GPU’s memory than it can hold. This is what all big AAA games with demanding graphics should do, and the obvious question here is: why don’t they?
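To make ‘not cramming more assets into VRAM than it can hold’ more concrete, here’s a minimal Python sketch of a budget-based residency manager. It isn’t based on any knowledge of TLOU2’s actual code. The `ResidencyManager` class, the 85% budget fraction (picked from the 80% to 90% usage seen earlier), the 1GB asset chunks, and the least-recently-used eviction policy are all hypothetical. Assets that no longer fit within the local budget are demoted to a non-local (system RAM) pool instead of overflowing VRAM.

```python
from collections import OrderedDict


class ResidencyManager:
    """Hypothetical VRAM residency tracker: fixed budget, LRU eviction.

    Assets that don't fit within the local (VRAM) budget are demoted to a
    non-local pool (system RAM) instead of over-committing VRAM.
    """

    def __init__(self, vram_gb, budget_fraction=0.85):
        # Assumption: target a fixed fraction of VRAM and keep the rest as headroom.
        self.budget_mb = int(vram_gb * 1024 * budget_fraction)
        self.local = OrderedDict()  # asset name -> size in MB, oldest first
        self.non_local = {}         # asset name -> size in MB, staged in system RAM
        self.local_mb = 0

    def request(self, name, size_mb):
        """Make an asset resident in VRAM if it can ever fit; return True if it is."""
        if name in self.local:
            self.local.move_to_end(name)  # mark as most recently used
            return True
        if size_mb > self.budget_mb:
            self.non_local[name] = size_mb  # can never fit, so keep it in system RAM
            return False
        self.non_local.pop(name, None)
        # Demote least recently used assets until the new one fits the budget.
        while self.local_mb + size_mb > self.budget_mb:
            old_name, old_size = self.local.popitem(last=False)
            self.local_mb -= old_size
            self.non_local[old_name] = old_size
        self.local[name] = size_mb
        self.local_mb += size_mb
        return True

    def usage(self):
        """Return (local MB, non-local MB)."""
        return self.local_mb, sum(self.non_local.values())


# Same hypothetical working set (ten 1GB chunks) on an 8GB and a 16GB card.
small, large = ResidencyManager(vram_gb=8), ResidencyManager(vram_gb=16)
for i in range(10):
    small.request(f"chunk{i}", 1024)
    large.request(f"chunk{i}", 1024)
print(small.usage())  # (6144, 4096)
print(large.usage())  # (10240, 0)
```

With the same working set, the hypothetical 16GB card keeps everything local. The 8GB card holds six chunks in VRAM and parks four in system RAM. The total footprint is the same, just split differently, which mirrors the pattern in the PIX figures, although the numbers here are invented for illustration.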

Another aspect of what I monitored might explain why: the scale of the CPU workload. One of the test rigs I used in my recent The Last of Us Part 2 Remastered performance analysis was an old Core i7 9700K paired with a Radeon RX 5700 XT. Intel’s Coffee Lake Refresh CPU is an eight-core, eight-thread design, and no matter which settings I used, CPU core usage was at 100% the whole time.

Even on the CyberPowerPC test rig, the Ryzen 7 9800X3D’s sixteen logical cores (i.e. eight physical cores, each handling two threads) were kept very busy. The load wasn’t as heavy as on the 9700K, but it was heavier than in any other game I’ve tested lately.

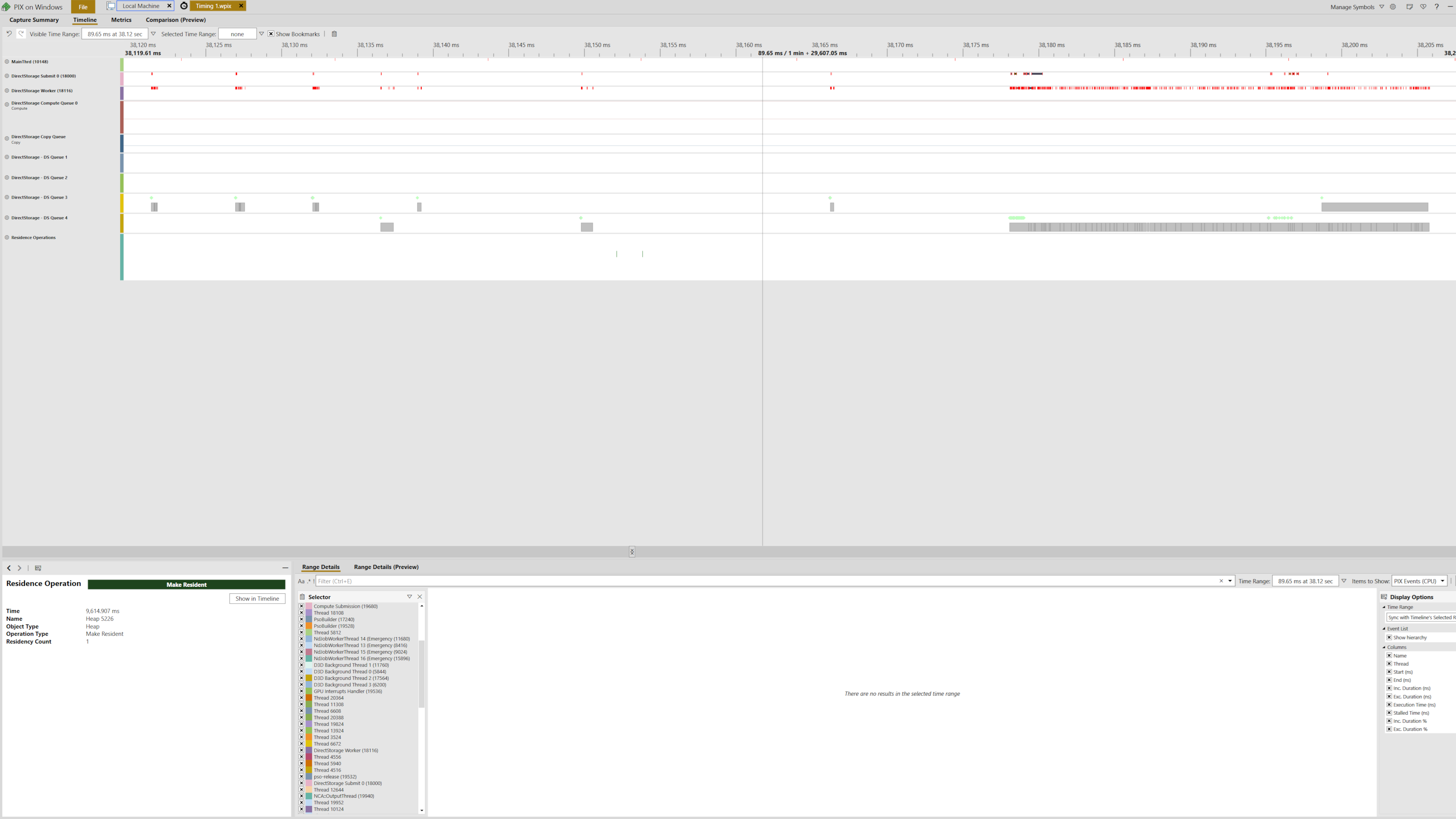

TLOU2 spawns lots of threads to manage various tasks, such as issuing graphics commands and compiling shaders, and at least eight of them are dedicated to DirectStorage tasks. I hasten to add that all modern games spawn more threads than you’d generally notice, so there’s nothing remarkable about the number TLOU2 uses for DirectStorage.

The PIX screenshot above covers 80ms of activity on these threads, which is only a handful of frames’ worth of rendering. While many threads are idle during that period, the two DirectStorage queues and the DirectStorage collector threads are fairly busy.

Since you can’t disable DirectStorage or background shader compilation in TLOU2, it’s hard to tell how big a role these workloads play in the game’s heavy demand for CPU time, but I suspect none of it is trivial.

However, I acknowledge that the biggest programming challenge is simply getting all this work properly integrated with the main game threads. It may be that most large game developers leave it to the end user to worry about VRAM usage rather than building an asset management system like TLOU2’s.

The Last of Us Part 2, like many other games, includes a VRAM usage indicator in its graphics menu. That’s relatively easy to implement, but making it 100% accurate *is* harder than you’d think.
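To illustrate why a VRAM indicator is easy to build but hard to get exactly right, here’s a naive Python estimator that adds up texture sizes, including mip chains. The function names and the 64KiB rounding are assumptions. The rounding is modelled loosely on Direct3D 12’s default 64KB resource placement alignment. Real drivers add their own padding and overhead, most game textures are block-compressed, and render targets, buffers, and other applications all use VRAM too. This sketch accounts for none of that.

```python
def texture_bytes(width, height, bytes_per_pixel, mip_chain=True):
    """Naive size of an uncompressed 2D texture, optionally with a full mip chain."""
    total = 0
    w, h = width, height
    while True:
        total += w * h * bytes_per_pixel
        if not mip_chain or (w == 1 and h == 1):
            break
        # Each mip level halves both dimensions, clamped at 1 pixel.
        w, h = max(1, w // 2), max(1, h // 2)
    return total


def align_up(size, alignment=64 * 1024):
    """Round a size up to an allocation granularity (64 KiB assumed here)."""
    return -(-size // alignment) * alignment


def estimate_vram_mib(textures):
    """Sum aligned sizes for a list of (width, height, bytes_per_pixel) textures."""
    total = sum(align_up(texture_bytes(w, h, bpp)) for w, h, bpp in textures)
    return total / (1024 * 1024)


print(texture_bytes(4096, 4096, 4, mip_chain=False))  # 67108864 (64 MiB)
print(texture_bytes(4096, 4096, 4))                   # 89478484 (~85.3 MiB)
print(estimate_vram_mib([(4096, 4096, 4)]))           # 85.375
```

A full mip chain adds about a third to the base level, so 64MiB becomes roughly 85.3MiB. Small details like that, multiplied across thousands of resources, are where simple estimates start to drift from what the driver actually allocates.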

At the risk of sounding like flame bait, let’s consider for a moment whether TLOU2’s asset management system is ‘the answer to 8GB of VRAM’. In some ways, 8GB *is* enough memory, because I couldn’t get TLOU2 to run out of it, and I tried lots of different areas, settings, and PC configurations to confirm that.

As with The Last of Us Part 2, any game doing the same thing will need to stream more assets across the PCIe bus on 8GB graphics cards. If that’s handled properly, though, it shouldn’t affect performance to any noticeable degree. The RTX 3060 Ti’s relatively low performance has nothing to do with its amount of VRAM. It comes down to its shaders, TMUs, ROPs, and memory bandwidth.

If you’ve just read that and are heading to the comments section to post YouTube links showing TLOU2 running out of VRAM, or running into other performance problems, on graphics cards with 8GB of VRAM or less, I’m definitely not going to say that all of those analyses are wrong and I’m the only one who’s right.

Anyone who’s been into PC gaming for a long time will know that PCs vary so much in hardware, software configuration, and environment that different people can easily have very different experiences.

Justin, who reviewed The Last of Us Part 2, used a Core i7 12700F with an RTX 3060 Ti and ran into performance issues at 1080p with the Medium preset. I used a Ryzen 5 5600X with the same GPU and had no problems. Two fairly similar PCs, two very different results.

Dealing with this kind of variation is one of the biggest headaches for PC game developers. That’s probably why we don’t see anything like TLOU2’s clever asset management system in wider use: testing it on every possible PC that might run the game is very time-consuming. It’s a shame, because although the technique isn’t flawless (for example, it occasionally fails to pull in assets at the right time, so some objects look low-detail up close), it does a great job of staying within whatever VRAM limit it has.

I’m not suggesting that 8GB is enough in the long term, because it isn’t. As ray tracing and neural rendering become more common, along with the ability to run AI NPCs on the GPU, the amount of memory on a graphics card will become an increasingly important commodity. Asset streaming is also effectively useless if your entire view of the 3D world is packed with objects and hundreds of ultra-complex materials, because those resources need to be in VRAM right there and then.

But I do hope that some game developers take notice of The Last of Us Part 2 and try to implement something similar. AMD and Nvidia are both still developing 8GB graphics cards and mobile chips, albeit in the entry-level sector, so limited VRAM will remain a fact of life for a while yet.