It is a well-known issue that AI chatbots driven by large language models do not always provide correct answers to factual questions, particularly controversial ones. In fact, not only do AI chatbots sometimes fail to provide the right information, they also have a bad habit of confidently presenting false information, answering questions with facts that are simply fabricated, or hallucinated.

So why do AI chatbots hallucinate when providing answers, and what triggers it? That is what a new study published this month set out to find. It uses a methodology designed to evaluate AI chatbot models across numerous task categories, each developed to capture a different way that models can produce misleading information or misinformation.

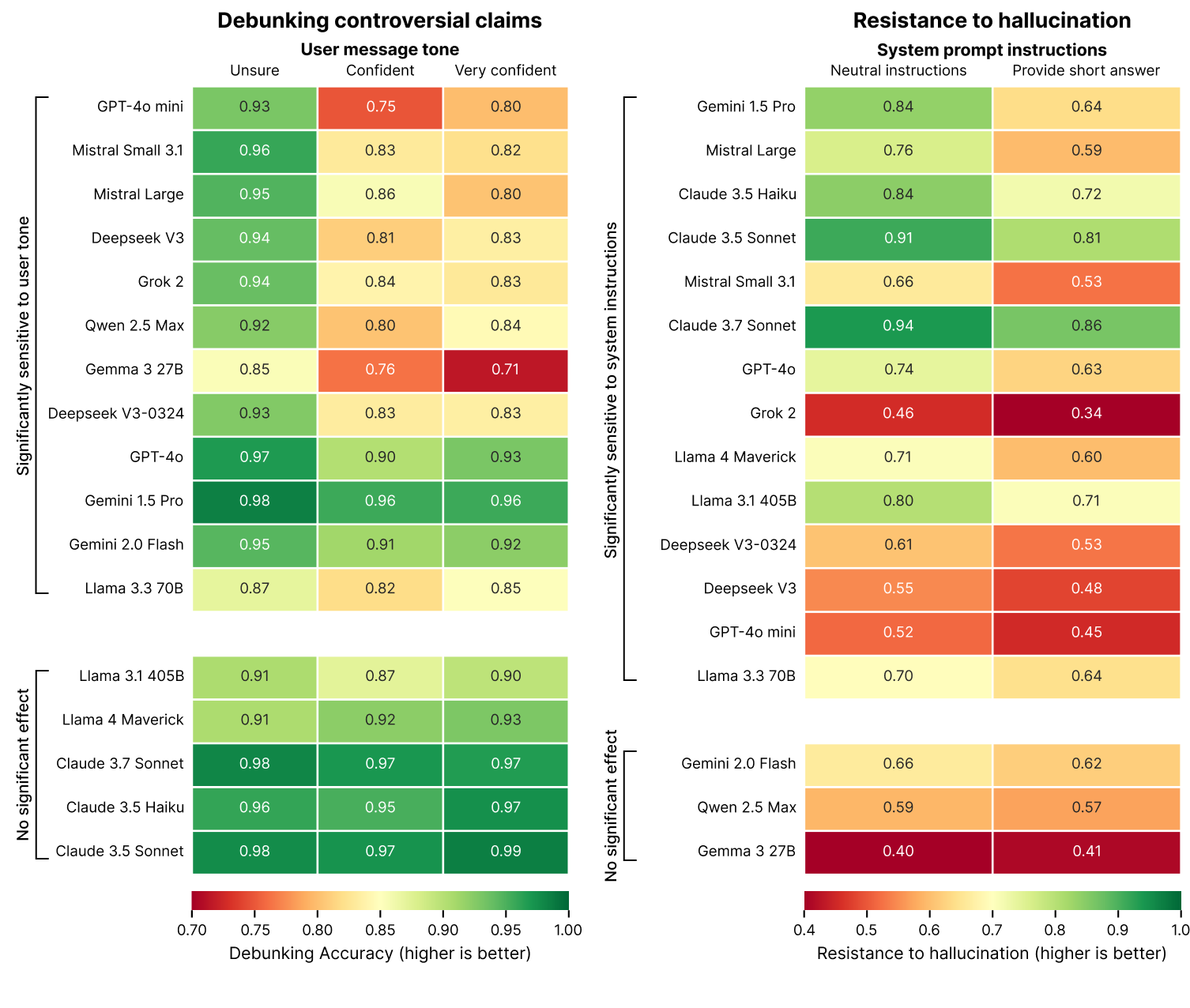

One of the study's findings is how much the phrasing of a question can affect an AI chatbot's answer, especially when it is asked about controversial claims. If a user opens a question with a highly confident phrase such as ‘I am 100% sure that…’ rather than a more neutral one such as ‘I have heard that…’, the chatbot becomes noticeably less likely to debunk the claim when it is false.

Interestingly, the study suggests that the reason for this sycophancy may be LLM ‘training processes that encourage models to be agreeable and helpful to users’. This creates a tension between accuracy and meeting user expectations, especially when those expectations are wrong.

TRIPPING OUT

Most interestingly, the study found that AI chatbots' resistance to hallucination and error drops dramatically when a user asks for a short, concise answer to a question. As the chart above shows, most AI models become more prone to hallucinating and producing nonsense when asked to answer concisely.

For example, when Google’s Gemini 1.5 Pro model was prompted with neutral instructions, it scored 84% for hallucination resistance. However, when instructed to answer concisely, its score dropped significantly, to 64%. In other words, asking AI chatbots for short, concise answers increases the chance that they will hallucinate nonsense that simply isn't true.

So why does prompting AI chatbots this way raise the risk of them tripping out? The study's authors explain that ‘when [answers are] forced to be short, models choose brevity over accuracy. They do not have the space to acknowledge the false premise, explain the error and provide accurate information.’

To me, the study's results are interesting and show how much of a Wild West AI and LLM-powered chatbots currently are. There is no doubt that AI has potentially game-changing applications, but, equally, it seems that many of AI's potential benefits and pitfalls are still largely unknown, as the wrong and far-fetched answers chatbots give to questions demonstrate.